Our research spans three main categories, each covering aspects of applications, algorithms, compilers, architecture, VLSI, and silicon prototyping:

- A — Domain-Specific Hardware Accelerators

- B — Fundamental Abstractions for Agile Chip Development Methodologies

- C — Tools and Frameworks for Agile Chip Development Methodologies

Our goal is to build performant, efficient domain-specific hardware accelerators, and to go a step further by designing them with new fundamental abstractions and agile tools and frameworks that make these systems more resilient to rapid changes in algorithms and applications.

Current Research Projects

Specializing Communication at the Inter-Chiplet Boundary for Energy-Efficient Machine Learning ( A, C )

An open chiplet ecosystem would allow heterogeneous integration of chips in a compact, advanced package. Building a chiplet ecosystem represents a tremendous paradigm shift in the time and cost of assembling future computing systems and constitutes a key thrust in the CHIPS and Science Act research strategy. How can we build chiplet-based systems to be simpler, faster, and more efficient when domain-specific hardware accelerators are communicating across inter-chiplet interfaces?

A New Cross-Layer Energy Abstraction for Design-Space Exploration Tools Driven by Generative AI ( B )

New foundational advances in machine learning and generative AI are creating excitement for a powerful new generation of design automation tools. However, some aspects of modern design flows remain highly manual and are not yet amenable to automation. Energy modeling is one of them: designing and verifying energy models remains a significant challenge. How can we design new cross-layer abstractions that allow automated tools to reason about energy?

Rapid Runtime Reconfigurable Arrays for Wideband Spectrum Sensing ( A, B, C )

Commercial and military demands on the electromagnetic spectrum are driving RF systems to operate in increasingly congested and complex environments. These systems must analyze large volumes of continuously streaming data, detect and characterize waveforms, and wake up downstream decision-making applications, all within unknown environments. We will build compute-dense runtime reconfigurable arrays with fast, flexible program switching controlled by embedded real-time schedulers, and ask fundamental questions about how such arrays should be built.

Using Formal Tools to Automatically Detect Late-Stage Timing Bugs in Hardware ( C )

Timing verification complexity is rapidly increasing for modern accelerator-rich SoCs, which may have hundreds of clock and power domains. While static timing analysis (STA) covers most of this complexity, gate-level simulation (GLS) must still be run to find late-stage timing bugs that can potentially brick a chip. Unfortunately, dynamic solutions like GLS simply will not scale to more complex designs. How can we close this gap, and can modern, sophisticated formal tools help?

An Agile Software/Hardware Design Methodology for Coarse-Grained Reconfigurable Arrays ( A, B, C )

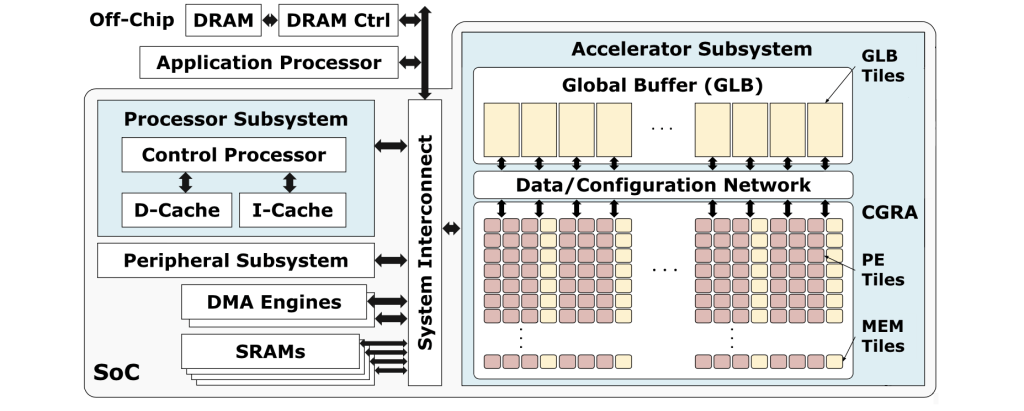

Coarse-grained reconfigurable arrays (CGRAs) are architectural templates that enable exploration of a large design space by specializing the compute and memory elements inside the array, so a CGRA can be tailored to accelerate applications from many different domains. By contrast, ASICs typically target a very limited set of applications, while FPGAs are fine-grained and suffer from low efficiency. This project explores an agile approach to designing CGRA compilers and accelerators, ranging from techniques for building automated mapping tools to giving the compiler control of power gating and dynamic voltage and frequency scaling (DVFS) to save power and energy.
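To make compiler-controlled power gating and DVFS more concrete, here is a minimal Python sketch of what such a pass might decide. It assumes a hypothetical pass that receives the set of tiles used by a kernel mapping, power-gates every other tile, and picks the lowest-energy voltage/frequency pair that still meets a deadline. The `plan_power` function, the operating points, and the energy model (dynamic energy proportional to C·V² per active tile per cycle, leakage ignored) are illustrative assumptions, not the project's actual compiler.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    """One voltage/frequency pair (hypothetical values for illustration)."""
    volts: float
    freq_mhz: float


def plan_power(width, height, used_tiles, cycles, deadline_us, points, c_eff_nf=1.0):
    """Hypothetical compiler pass: power-gate unused tiles, then choose the
    operating point with the lowest dynamic energy that meets the deadline."""
    all_tiles = {(x, y) for x in range(width) for y in range(height)}
    gated = sorted(all_tiles - set(used_tiles))

    best_point, best_energy = None, None
    for point in points:
        # 1 MHz is one cycle per microsecond, so runtime_us = cycles / freq_mhz.
        runtime_us = cycles / point.freq_mhz
        if runtime_us > deadline_us:
            continue  # too slow to meet the deadline
        # Assumed model: energy ~ C_eff * V^2 per active tile per cycle (nJ);
        # gated tiles contribute nothing and leakage is ignored.
        energy_nj = c_eff_nf * point.volts ** 2 * cycles * len(used_tiles)
        if best_energy is None or energy_nj < best_energy:
            best_point, best_energy = point, energy_nj

    if best_point is None:
        raise ValueError("no operating point meets the deadline")
    return gated, best_point, best_energy


points = [OperatingPoint(0.6, 250), OperatingPoint(0.8, 500), OperatingPoint(1.0, 1000)]
used = {(0, 0), (0, 1), (1, 0)}  # tiles occupied by a hypothetical kernel mapping
gated, point, energy = plan_power(2, 2, used, cycles=1000, deadline_us=3.0, points=points)
print(gated, point)  # [(1, 1)] OperatingPoint(volts=0.8, freq_mhz=500)
```

Because this toy model ignores leakage, the slowest point that meets the deadline always wins; a more realistic model would also weigh leakage against runtime.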

- Silicon Prototypes: [ JSSC 2023 ] – [ VLSI 2022 ] – [ Hot Chips 2022 ]

- End-to-End Software-Hardware Toolchain: [ TECS 2022 ] – [ DAC 2020 ]

- Compiler-Controlled Power Gating and DVFS: [ TRETS 2023 ] – [ HPCA 2021 ]

- Best Demo Award at VLSI 2022!

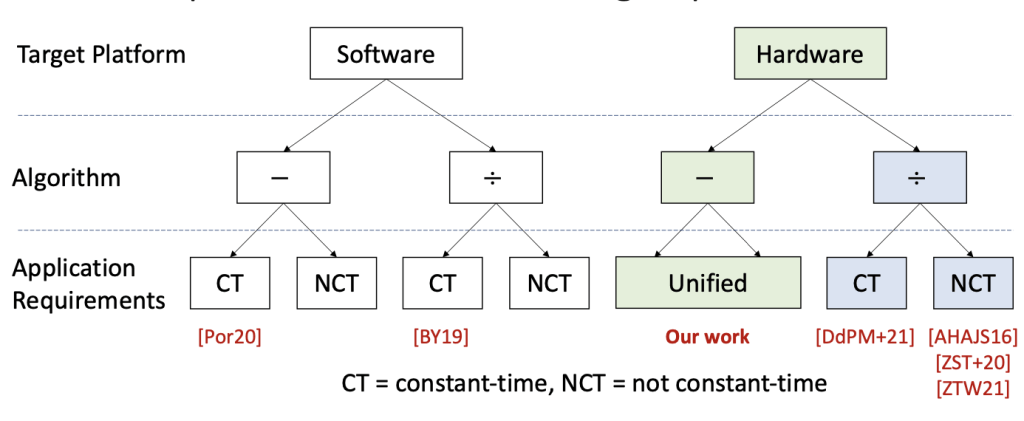

Hardware Acceleration for Advanced Cryptography Primitives ( A )

We are building the world's fastest hardware accelerator for the extended greatest common divisor (XGCD) computation. XGCD is used in modular inversion, a primitive operation in elliptic curve cryptography, and in verifiable delay functions (VDFs), a new cryptographic primitive for proofs of sequential work. VDFs are being considered by major blockchains (e.g., Ethereum, Chia) as a replacement for proof of work.
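For readers less familiar with XGCD, the sketch below shows what the computation returns and how it yields a modular inverse. It is the textbook iterative extended Euclidean algorithm, written in Python for clarity; it is not the algorithm implemented by the hardware accelerator, and the names `xgcd` and `mod_inverse` are illustrative.

```python
def xgcd(a: int, b: int) -> tuple[int, int, int]:
    """Textbook extended Euclid: return (g, x, y) with a*x + b*y == g == gcd(a, b)."""
    old_r, r = a, b
    old_x, x = 1, 0
    old_y, y = 0, 1
    while r != 0:
        q = old_r // r
        # Each step keeps the invariants a*old_x + b*old_y == old_r and a*x + b*y == r.
        old_r, r = r, old_r - q * r
        old_x, x = x, old_x - q * x
        old_y, y = y, old_y - q * y
    return old_r, old_x, old_y


def mod_inverse(a: int, m: int) -> int:
    """Modular inverse via XGCD: if a*x + m*y == 1, then a*x ≡ 1 (mod m)."""
    g, x, _ = xgcd(a % m, m)
    if g != 1:
        raise ValueError(f"{a} has no inverse modulo {m}")
    return x % m


# Inverse of 3 modulo 11 is 4, since 3 * 4 = 12 ≡ 1 (mod 11).
print(mod_inverse(3, 11))  # 4

# Same operation in the Curve25519 field, checked against Python's built-in pow (3.8+).
p = 2**255 - 19
a = 123456789
assert mod_inverse(a, p) == pow(a, -1, p)
```

Cryptographic operands are hundreds or thousands of bits wide, so each of the many quotient-and-update iterations above becomes an expensive wide-integer operation; this is what makes XGCD latency a natural target for hardware acceleration.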

- Publications: [ CHES 2022 ] – [ Paper Artifact ] – [ OSCAR 2023 ]

Designing Agile Flow Tools for Rapid Chip Prototyping ( C )

Achieving high code reuse in physical design flows is challenging but increasingly necessary to build complex systems. Unfortunately, existing approaches based on parameterized Tcl generators support very limited reuse: designers customize flows for specific designs and technologies, which prevents those flows from being reused in the future. We present mflowgen, a vision and framework based on modular flow generators that encapsulates coarse-grained and fine-grained reusable code in modular nodes and assembles them into complete flows.
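The idea of assembling reusable nodes into complete flows can be sketched in a few lines of Python. The `Node` and `Flow` classes, the `connect_matching` method, and the file names below are hypothetical and do not reproduce mflowgen's actual API; they only show how nodes that declare their inputs and outputs can be wired together by matching file names and ordered automatically, so that retargeting a flow means swapping one technology node while reusing the rest unchanged.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass(frozen=True)
class Node:
    """A reusable flow step (hypothetical): it declares the files it consumes and produces."""
    name: str
    inputs: tuple = ()
    outputs: tuple = ()


class Flow:
    """A flow is a DAG of nodes; an edge means one node feeds files to another."""

    def __init__(self):
        self.nodes = {}
        self.preds = {}  # node name -> set of predecessor node names

    def add(self, *nodes):
        for node in nodes:
            self.nodes[node.name] = node
            self.preds.setdefault(node.name, set())

    def connect_matching(self, src, dst):
        """Wire every output of `src` to the same-named input of `dst`."""
        shared = set(self.nodes[src].outputs) & set(self.nodes[dst].inputs)
        if not shared:
            raise ValueError(f"{src} produces nothing that {dst} consumes")
        self.preds[dst].add(src)
        return sorted(shared)

    def order(self):
        """Return an execution order in which every node runs after its predecessors."""
        return list(TopologicalSorter(self.preds).static_order())


# Technology-agnostic nodes, reused unchanged across flows (hypothetical file names).
SYNTH = Node("synth", inputs=("rtl.v", "stdcells.lib"), outputs=("netlist.v",))
PNR = Node("place-route", inputs=("netlist.v", "stdcells.lef"), outputs=("design.gds",))


def build_flow(tech):
    """Assemble a complete flow around a swappable technology node."""
    flow = Flow()
    flow.add(tech, SYNTH, PNR)
    flow.connect_matching(tech.name, "synth")
    flow.connect_matching(tech.name, "place-route")
    flow.connect_matching("synth", "place-route")
    return flow


TECH_A = Node("tech-a", outputs=("stdcells.lib", "stdcells.lef"))
TECH_B = Node("tech-b", outputs=("stdcells.lib", "stdcells.lef"))
print(build_flow(TECH_A).order())  # ['tech-a', 'synth', 'place-route']
print(build_flow(TECH_B).order())  # ['tech-b', 'synth', 'place-route']
```

Deriving the execution order from declared inputs and outputs, rather than hard-coding it in a script, is what lets the same synthesis and place-and-route nodes drop into either flow.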

- Silicon prototypes built with mflowgen: 20+

- Recent tapeout classes supported by mflowgen:

    - Fall 2023 – USC EE 599: Special Topic on Complex Digital ASIC System Design (Intel 16)

    - Spring 2023 – Stanford EE 372: Design Projects in VLSI Systems II (Intel 16)

    - Spring 2022 – Stanford EE 372: Design Projects in VLSI Systems II (SkyWater 130nm)

    - Spring 2021 – Stanford EE 272B: Design Projects in VLSI Systems II (SkyWater 130nm)

- Recent tapein classes supported by mflowgen:

    - Spring 2023 – Cornell ECE 5745: Complex Digital ASIC Design

    - Spring 2022 – Cornell ECE 5745: Complex Digital ASIC Design

    - Spring 2021 – Cornell ECE 5745: Complex Digital ASIC Design

- Publications: [ DAC 2022 ]

- Open Source: [ GitHub ] – [ Read the Docs ]

Previous Research Projects

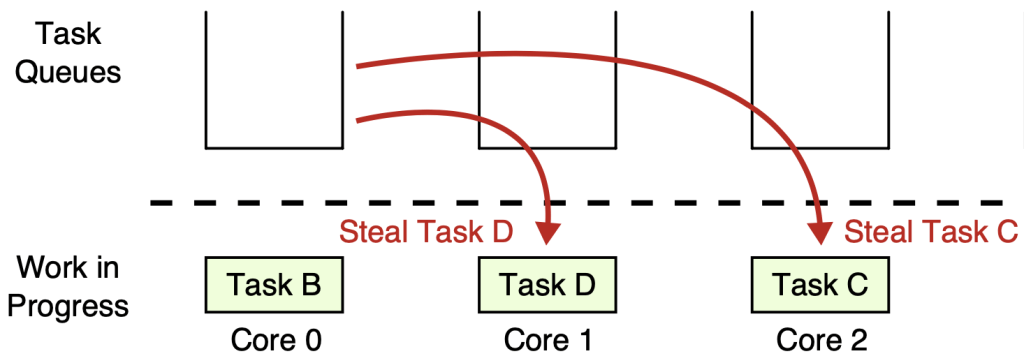

Building Efficient Task-Based Parallel Runtimes for Multicore Systems

Task-based parallel runtimes underpin the parallelization of frameworks for machine learning, graph analytics, and other domains. State-of-the-art graph analytics frameworks like GraphIt and Ligra are built on top of these runtimes, which distribute tasks efficiently using dynamic work-stealing algorithms. My work has improved the performance and energy efficiency of these runtimes with a cross-stack approach that exposes runtime-level information to the hardware to control architecture- and VLSI-level mechanisms (ISCA 2016). However, walls of abstraction often make it challenging to pass information through the layers of the computing stack, so I worked on a systematic approach to convey the abstraction of a “task” from the runtime directly to the underlying hardware (MICRO 2017). I also designed and fabricated BRGTC2, a 6.7M-transistor chip in TSMC 28nm, to collect performance, area, and energy data in an advanced technology node for future research on hardware acceleration of task-based parallel runtimes (RISCV 2018).
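The dynamic work-stealing policy mentioned above can be illustrated with a small, deterministic Python simulation. It models the textbook scheme popularized by Cilk, not the runtime or hardware from this work: each worker pushes and pops tasks at the bottom of its own deque, and an idle worker steals the oldest task from the top of a randomly chosen victim's deque. The binary task tree, the lock-step scheduling loop, and the `run_work_stealing` name are assumptions made for illustration.

```python
import random
from collections import deque


def run_work_stealing(num_workers, root_depth, seed=0):
    """Simulate work stealing on a binary task tree of depth `root_depth`.

    Returns (tasks executed per worker, total number of steals).
    """
    rng = random.Random(seed)
    deques = [deque() for _ in range(num_workers)]
    deques[0].append(root_depth)  # worker 0 starts with the root task
    executed = [0] * num_workers
    steals = 0

    while any(deques):
        # One pass = one "time step": each worker runs at most one task.
        for w in range(num_workers):
            own = deques[w]
            if not own:
                victims = [v for v in range(num_workers) if v != w and deques[v]]
                if not victims:
                    continue  # nothing to steal this step
                # Thieves take the oldest task from the top (left end) of a victim's deque.
                own.append(deques[rng.choice(victims)].popleft())
                steals += 1
            depth = own.pop()  # owners work LIFO at the bottom (right end)
            executed[w] += 1
            if depth > 0:
                own.append(depth - 1)  # spawn two child tasks
                own.append(depth - 1)
    return executed, steals


per_worker, steals = run_work_stealing(num_workers=4, root_depth=10)
print(sum(per_worker), steals)  # first value is 2047 (= 2**11 - 1); steals depend on the seed
```

Stealing from the opposite end of the deque tends to hand thieves the oldest, and therefore largest, subtrees, which keeps steals infrequent relative to local work while owners keep operating on their most recently spawned tasks.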

Architecture-VLSI Co-Design with Integrated Voltage Regulation

Voltage regulators are responsible for efficiently converting one voltage level into another (e.g., board-level to chip-level). Recent technology trends are making it feasible to replace discrete voltage regulators with integrated voltage regulators, which can significantly reduce system cost by eliminating expensive board-level components. These enabling trends include energy storage elements with better energy densities and faster on-chip switches with lower parasitic losses. However, integrated voltage regulators are very large (e.g., comparable in area to the core they supply). Together with my colleagues in the circuits field, I applied an architecture-circuit co-design approach to develop a novel technique that dynamically shares capacitance across multiple loads, reducing regulator area by 40% while still enabling fine-grained DVFS (MICRO 2014). I also contributed to the fabrication of a switched-capacitor-based prototype in 65nm CMOS, resulting in a journal publication in a top-tier circuits venue (IEEE TCAS I 2018).

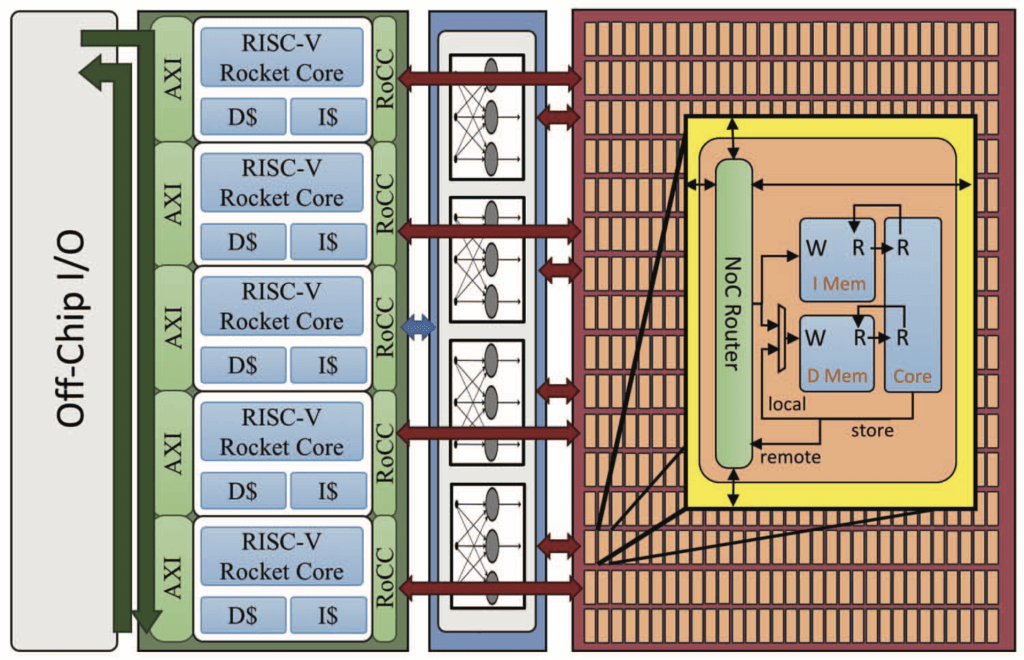

Celerity 511-Core RISC-V Tiered Accelerator Fabric

Rising SoC design costs have created a formidable barrier to hardware design when using traditional design tools and methodologies. It is exceedingly difficult for small teams with a limited workforce to build meaningfully complex chips. I have been involved in a range of efforts to reduce the costs and challenges of ASIC design for small teams based on productive toolflows and open-source hardware. I was the Cornell University student lead on the Celerity SoC, which resulted in top-tier publications in chip-design venues (Hot Chips 2017, VLSIC 2019), architecture venues (IEEE Micro 2018), and various workshops.